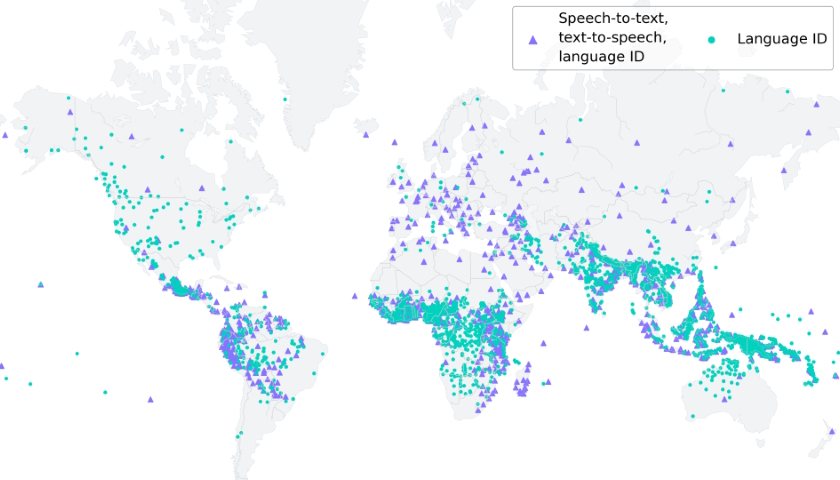

Meta’s looking to help more users in more regions maximize connectivity and accessibility by open sourcing its Massively Multilingual Speech (MMS) models, which can already transcribe and generate speech in over 1,100 languages and dialects from around the world.

As explained by Meta:

“Many of the world’s languages are in danger of disappearing, and the limitations of current speech recognition and generation technology will only accelerate this trend. We want to make it easier for people to access information and use devices in their preferred language, and today we’re announcing a series of artificial intelligence (AI) models that could help them do just that.”

Meta says that its MMS models can also identify more than 4,000 spoken languages, providing significant capacity to connect and integrate more regions in the future.

Open sourcing the MMS models will enable others in the research community to build on their speech recognition and generation capacity, which should see more languages added over time, enabling them to live on in new forms.

That not only has important archival value, but will also support greater connectivity as new technologies reach new regions and more people look to use these tools to interact.

It’s an important step for speech recognition services and for preservation tools that can provide a window into history. And as we move into a new era, where people are increasingly speaking or typing things into existence, inclusion will be critical to making that possible on a global scale.

If generative AI becomes as pervasive as predicted, this will indeed be critical, which is why Meta’s open source announcement is so significant.

Read more: socialmediatoday.com